Malcolm Frank (Cognizant)

AI is already here today in the little helpers we use on a daily basis (personal assistants, next-gen GPS apps, etc.).

When Deep Blue beat the human world chess champion, people still belittled the achievement and did not attribute it to AI: IBM's Deep Blue was written for the single purpose of defeating the world chess champion. Later, Watson defeated Jeopardy! champions. That was acknowledged as an improvement because it required real-time deciphering of natural language. Still, once the clue was understood, the rest was essentially a search algorithm, so people again played the achievement down. When DeepMind's AlphaGo beat the world Go champion, however, it was a key turning point: it was a general-purpose learning AI that was taught to play Go, not a purpose-built application. Go is also the most complex board game in existence, orders of magnitude more complex than chess, with no practical way to brute-force it.

Here we pitted 2,500 years of human Go expertise against two years of computer development, and the computer won.

However, even after the decisive victory in Go, some people still said that AI could only succeed where problems could be solved algorithmically. In games like poker, they argued, where subtle human cues are important, it would not be able to compete. Recently, however, a computer decisively beat top poker players.

It turns out that short-term human strategies like bluffing, or visual cues, do not stack up against a long-term algorithm. The algorithm can adapt, learning to catch these kinds of invisible cues over time.

Today, 8 out of 10 hedge funds are AI-driven. Tesla's automated driving has accumulated over 200 million miles, and the more cars Tesla sells, the more learning it accumulates. In radiology, AI identification of anomalies like cancer has reached an accuracy of 99.6%, exceeding the human accuracy of 92%. In the legal world, due diligence that takes paralegals two weeks is now done by machines in two hours. JP Morgan Chase has deployed a loan-review system that has automated over 360,000 hours of what used to be human work. Helpdesk staff are being replaced by voice-recognition software and chatbots. AI is also proving to be much better than humans at investment advisory, because it has none of the biases humans have. The displacement of humans by AI is already happening, and it's happening rapidly.

The takeover of jobs by AI can be described as a capitalist's dream and a labor nightmare. For capitalists, AI and automation:

- Radically lower operational costs

- Improve quality (fewer errors)

- Boost speed

- Provide insights and meaning that were previously unavailable

What happens on the other side of the equation? A 2013 Oxford study predicts that 47% of US jobs are at risk of automation in the next one or two decades. Nevertheless, Malcolm Frank is optimistic. Why? In the past, technological advancement focused on smaller job domains, but now it is shifting to bigger systems: finance, healthcare, government. He sees three scenarios for humans:

- Replaced - AI takes over your job completely

- Enhanced - AI enhances your job, taking away the rote activities and leaving you to deal with higher level functions

- Invented - AI creates new jobs that didn't exist previously

Frank says 90% of the conversation centers on replacement, but he believes that 90% of the actual impact of AI will be in enhancement and invention. He breaks it down as follows:

- 12% of jobs will be replaced

- 75% of jobs will be enhanced

- 12% of jobs will be invented

Simpler jobs will be replaced altogether, but automation has been eliminating jobs for quite some time now. For example, automated toll booths replaced human toll booth operators, and that was not a job anyone really wanted to do. Until now, automation replaced primarily blue-collar jobs. Now, however, software is automating white-collar jobs as well, if they are rote enough.

As an example, a company called Narrative Science has software that automates simple journalism. Almost all write-ups of minor sports events that received small coverage in local newspapers are no longer written by people but by its software. The following article was written simply by entering the play information into the company's story-writing software:

(Image: story written by Narrative Science software)

A guideline he gave for whether your job is at risk: do you sit in a large cubicle farm? If so, your job is at risk.

Most jobs, however, are a sum of multiple tasks: some of the tasks will be automated, but some will not. Take lawyers, for example:

Some of these tasks can be automated (examining legal data, doing research, preparing and drafting legal documents), but others are not so easy (presenting cases before clients and courts, gathering evidence, etc.). Again, this is nothing new. A taxi driver already uses GPS for navigation, a credit card reader to automate payment, and possibly an app for a ride-hailing service like Get Taxi.

Generally speaking, you could describe a "periodic table" of jobs as follows:

(Image: a "periodic table" of jobs. The jobs on the left will be automated; the ones on the right will be enhanced.)

People will do the "art" of a job; machines will do the "science" of the job. Examples of areas of augmentation:

And our institutions certainly have vast room for improvement. Healthcare, for example, is a very inefficient, very bureaucratic industry, with many activities unrelated to actual health (forms, returns, appointments, questionnaires, reports, etc.). Some of the improvements that can be expected in healthcare include:

What about new jobs? Frank describes what he calls the Budding Effect. Edwin Budding invented the lawnmower in 1827. The ability to mow lawns to an even height vastly improved the ability to play sports. This opened the door to a huge sporting industry that was previously limited or impossible. A more striking example of the Budding Effect is the invention of ways to generate and deliver electricity. Electricity enabled communication, radio, TV, and countless other industries, none of which could have been imagined when the initial inventions for generating electricity were made. Frank quotes W. Brian Arthur, who wrote in the McKinsey Quarterly that by 2025 the second economy (the digital economy) will be as large as the entire 1995 physical economy. Interestingly, Arthur acknowledges that some jobs will be lost to the second economy and will not be coming back. He sees this lack of jobs as a problem that needs to be addressed, and says the focus should be less on job creation and more on wealth distribution.

Where will new jobs be created? These are some good candidate domains:

Wellness - as mentioned previously, the current healthcare system is operating very poorly. We will see a shift to patient-centered care rather than doctor-centered care. Furthermore, AI will be able to predict when we are likely to become unwell. We will then be able to take preventive measures in advance and reduce illness overall.

Biotechnology - will be bigger than IT by 2025

VR and AR - will grow to a size similar to today's movie industry. VR is predicted to be a bigger business than TV by 2025; Tim Cook (Apple CEO) predicts that AR will be bigger than VR in 10 years.

The experience economy - people with time on their hands will take more vacations, and beyond just going places, they will want to experience things. Airbnb is planning to expand from providing lodging to providing experiences: want to live in a medieval castle, relive the Renaissance, or experience what it was like to be a pioneer? Specialized experience packages will take tourism to different levels altogether (à la Westworld, hopefully without the murderous robots).

Smart infrastructure - smart buildings, roads, and so on. The money for projects like these comes from automation. When self-driving cars eliminate the majority of car accidents, the savings to the US economy alone will be $1 trillion (as a point of comparison, all federal tax revenue is $1.7 trillion).

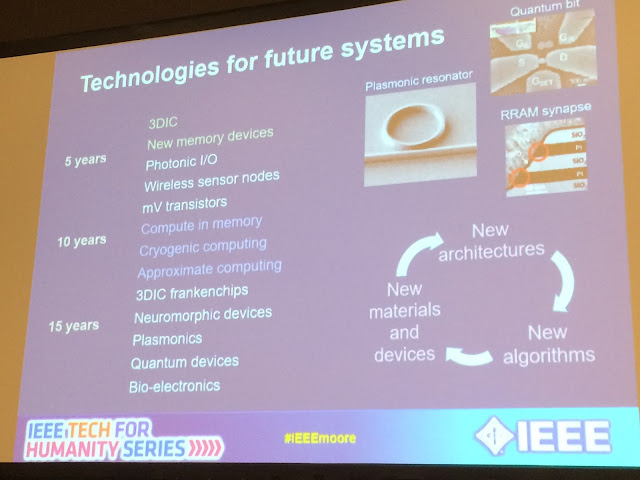

Next-gen IT - cybersecurity and quantum computing are emerging domains that will create jobs as well.

In summary, says Frank, never short human imagination: human wants and desires are limitless, and they will give us all plenty to do.